# Is Rusting?

ACTION=="add|change", KERNEL=="sd[a-z]*", ATTR{queue/rotational}=="1", ATTR{queue/scheduler}="cfq"

# LSI SSD

ACTION=="add|change", KERNEL=="sd[a-z]*", ATTR{queue/rotational}=="0", ATTR{queue/scheduler}="noop", ATTR{queue/nr_requests}="975", ATTR{device/queue_depth}="975"
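To check what the rules actually did after a reboot or a udev trigger, something like the sketch below can read the values back from sysfs. The function name and the `SYSFS_BLOCK` override are my own inventions for illustration; on a real host it just reads `/sys/block`.

```shell
# Print "<device> rotational=<0|1> scheduler=<active>" for each sd* disk.
# SYSFS_BLOCK is a hypothetical override so the function can be pointed at a
# test directory; by default it reads /sys/block.
show_disk_queues() {
    sysfs="${SYSFS_BLOCK:-/sys/block}"
    for dev in "$sysfs"/sd*; do
        # Skip anything without a readable queue directory (or an unmatched glob).
        [ -r "$dev/queue/rotational" ] || continue
        rot=$(cat "$dev/queue/rotational")
        # The active scheduler is the bracketed entry, e.g. "noop [cfq] deadline".
        sched=$(sed 's/.*\[\(.*\)\].*/\1/' "$dev/queue/scheduler")
        printf '%s rotational=%s scheduler=%s\n' "${dev##*/}" "$rot" "$sched"
    done
}
```

Calling `show_disk_queues` with no arguments lists every sd* disk, so a spinning disk and an SSD should show different schedulers if both rules matched.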

OpenStack Trove disk-image-builder

The documentation for this process absolutely sucks, and the fact that no one has updated it (shame on me for even saying that about an open source project that I can push a branch to…) is pitiful. So here’s some useful info for completing an image!

Useful posts:

1: https://docs.openstack.org/trove/latest/admin/building_guest_images.html

2: https://ask.openstack.org/en/question/95078/how-do-i-build-a-trove-image/

yum install epel-release git -y

git clone https://git.openstack.org/openstack/diskimage-builder

yum install python2-pip

cd diskimage-builder

pip install -r requirements.txt

python setup.py install

Now we clone the trove git repo and add the extra elements as an environment variable.

git clone https://github.com/openstack/trove.git

# Guest Image to be used

export DISTRO=fedora

export DISTRO_VERSION=fedora-minimal

# Guest database to be provisioned

export SERVICE_TYPE=mariadb

export HOST_USERNAME=root

export HOST_SCP_USERNAME=root

export GUEST_USERNAME=trove

export CONTROLLER_IP=controller

export TROVESTACK_SCRIPTS="/root/trove/integration/scripts"

export PATH_TROVE="/opt/trove"

export ESCAPED_PATH_TROVE=$(echo $PATH_TROVE | sed 's/\//\\\//g')

export GUEST_LOGDIR="/var/log/trove"

export ESCAPED_GUEST_LOGDIR=$(echo $GUEST_LOGDIR | sed 's/\//\\\//g')

#path to the ssh keys you want installed on the guest.

export SSH_DIR=~/trove-image/sshkeys/

export DIB_CLOUD_INIT_DATASOURCES="ConfigDrive"

# DATASTORE_PKG_LOCATION defines the location from where the datastore packages can be accessed by the DIB elements. This is applicable only for datastores that do not have a public repository from where their packages can be accessed. This can either be a url to a private repository or a location on the local filesystem that contains the datastore packages.

export DATASTORE_PKG_LOCATION=~/trove-image

export ELEMENTS_PATH=$TROVESTACK_SCRIPTS/files/elements

export DIB_APT_CONF_DIR=/etc/apt/apt.conf.d

export DIB_CLOUD_INIT_ETC_HOSTS=true

#WTF Is this?

#local QEMU_IMG_OPTIONS="--qemu-img-options compat=1.1"

#build the disk image in our home dir.

disk-image-create -a amd64 -o ~/trove-${DISTRO_VERSION}-${SERVICE_TYPE}.qcow2 -x ${DISTRO_VERSION} ${DISTRO}-guest vm cloud-init-datasources ${DISTRO}-${SERVICE_TYPE}
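With this many exports in play, a misspelled variable name silently expands to an empty string and the build carries on with a wrong file or element name. A small guard like the sketch below can catch that before the build starts. `require_vars` is a hypothetical helper of mine, not part of diskimage-builder or trove.

```shell
# require_vars NAME...: fail if any named variable is unset or empty.
require_vars() {
    missing=0
    for name in "$@"; do
        # Indirect lookup via eval keeps this working in plain POSIX sh.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing variable: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Possible use before the build (not executed here):
#   require_vars DISTRO DISTRO_VERSION SERVICE_TYPE ELEMENTS_PATH || exit 1
```

Running the script with `set -u` gets a similar effect with less typing, at the cost of aborting on the first unset variable instead of listing all of them.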

Your own CentOS 7 SSL CA

Create your CA keys and install them.

mkdir ~/newca

cd ~/newca

openssl genrsa -des3 -out myCA.key 4096

openssl req -x509 -new -nodes -key myCA.key -sha256 -days 1825 -out myCA.pem

cp myCA.key /etc/pki/CA/private/cakey.pem

cp myCA.pem /etc/pki/CA/cacert.pem

touch /etc/pki/CA/index.txt

echo '1000' > /etc/pki/CA/serial

Create a certificate request and sign it.

openssl req -newkey rsa:2048 -nodes -keyout client.key -subj "/C=US/ST=Denial/L=Springfield/O=Dis/CN=www.example.com" -out client.csr

openssl ca -in client.csr -days 1000 -out client-.pem
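Before handing the signed certificate to anything, it is worth confirming that it really chains back to the new CA. A minimal sketch using two stock openssl subcommands follows; the function names are mine, and the paths in the usage comment assume the layout used above.

```shell
# verify_cert CA_PEM CERT_PEM: succeed only if CERT_PEM chains to CA_PEM.
verify_cert() {
    openssl verify -CAfile "$1" "$2"
}

# show_cert CERT_PEM: print subject, issuer and validity window.
show_cert() {
    openssl x509 -in "$1" -noout -subject -issuer -dates
}

# Possible use with the files created above (not executed here):
#   verify_cert /etc/pki/CA/cacert.pem client-.pem
#   show_cert client-.pem
```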

Building Octavia Images with CentOS 7 and Haproxy

Do this in your python virtual env

pip install diskimage-builder

git clone https://github.com/openstack/octavia.git

cd octavia/diskimage-create/

./diskimage-create.sh -b haproxy -a amd64 -o amphora-x64-haproxy -t qcow2 -s 3 -i centos

openstack image create --tag amphora --container-format bare --disk-format qcow2 --file amphora-x64-haproxy.qcow2 Amphora-CentOS7-x64-Haproxy

#Or update an existing image with the tag

glance image-tag-update e4af2c6c-f7fd-4b45-a512-145282236044 amphora

Ceph Luminous convert Filestore OSD to Bluestore (No WAL/DB)

systemctl stop ceph-osd@3

umount /var/lib/ceph/osd/ceph-3/

ceph-volume lvm zap /dev/sdb

ceph osd destroy osd.3 --yes-i-really-mean-it

ceph-volume lvm create --bluestore --data /dev/sdb --osd-id 3
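The same sequence repeats for every OSD with only the ID and device changing, so it can be templated. The sketch below only prints the commands (a dry run) so they can be reviewed before anything destructive happens. `convert_osd_cmds` is a hypothetical helper name, and it uses nothing beyond the five commands shown above.

```shell
# convert_osd_cmds OSD_ID DEVICE: print the conversion commands for one OSD.
# Nothing is executed here; review the output before running any of it.
convert_osd_cmds() {
    id="$1"
    dev="$2"
    cat <<EOF
systemctl stop ceph-osd@${id}
umount /var/lib/ceph/osd/ceph-${id}/
ceph-volume lvm zap ${dev}
ceph osd destroy osd.${id} --yes-i-really-mean-it
ceph-volume lvm create --bluestore --data ${dev} --osd-id ${id}
EOF
}

# Example: convert_osd_cmds 3 /dev/sdb
```

Doing one OSD at a time and waiting for the cluster to settle between conversions is the cautious way to use this.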

Openstack Ansible Kolla On CentOS 7 with Python VirtualEnv

Useful Links

Operating Kolla – https://docs.openstack.org/kolla-ansible/latest/user/operating-kolla.html

Advanced Config – https://docs.openstack.org/kolla-ansible/latest/admin/advanced-configuration.html

Docker Image Repo: Probably deploy one of these because the images are fairly large.

NOTE: firewalld & NetworkManager should be removed. Docker plays nice with SELinux and everything works reliably. You CAN use firewalld, but you will have to open the external ports manually, which is outside the scope of kolla.

Deployment Tools Installation:

Deploying OpenStack via Ansible is the new preferred method. This process is loose and changes every release, so here’s what I have so far to deploy the Rocky release successfully.

#Install deps

yum install epel-release -y

yum install ansible python-pip python-virtualenv python-devel libffi-devel gcc openssl-devel libselinux-python -y

#Install docker

curl -sSL https://get.docker.io | bash

mkdir -p /etc/systemd/system/docker.service.d

tee /etc/systemd/system/docker.service.d/kolla.conf <<-'EOF'

[Service]

MountFlags=shared

EOF

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

virtualenv --system-site-packages /opt/openstack/

source /opt/openstack/bin/activate

pip install -U pip

#Install kolla-ansible for our release

pip install --upgrade kolla-ansible==7.0.0

pip install decorators python-openstackclient selinux python-ironic-inspector-client

cp -r /opt/openstack/share/kolla-ansible/etc_examples/kolla /etc/

cp -r /opt/openstack/share/kolla-ansible/ansible/inventory/* ~

echo "ansible_python_interpreter: /opt/openstack/bin/python" >> /etc/kolla/globals.yml

kolla-genpwd

Custom configurations:

As of now kolla only supports config overrides for INI-based configs. An operator can change the location where custom config files are read from by editing /etc/kolla/globals.yml and adding the following line.

# The directory to merge custom config files with kolla's config files

node_custom_config: "/etc/kolla/config"

Kolla allows the operator to override configuration of services. Kolla will look for a file in /etc/kolla/config/<< service name >>/<< config file >>. This can be done per-project, per-service or per-service-on-specified-host. For example to override scheduler_max_attempts in nova scheduler, the operator needs to create /etc/kolla/config/nova/nova-scheduler.conf with content:
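The file content itself is missing from these notes. A minimal sketch of what such an override could look like is below. Treat the `[DEFAULT]` section and the value `100` as assumptions for illustration rather than something taken from this post, and check the section name against your nova release. `write_nova_override` is a hypothetical helper that takes the config directory as its argument.

```shell
# write_nova_override CONFIG_DIR: create nova/nova-scheduler.conf under CONFIG_DIR.
# Section name and value are illustrative assumptions.
write_nova_override() {
    dir="$1/nova"
    mkdir -p "$dir"
    cat > "$dir/nova-scheduler.conf" <<'EOF'
[DEFAULT]
scheduler_max_attempts = 100
EOF
}

# Possible use (not executed here): write_nova_override /etc/kolla/config
```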

Ironic Kolla Configs:

Ironic needs an initramfs and a kernel to boot the install image. You need to build some images with the OpenStack image builder. Below are just the CentOS installer images; these are not what you need. 🙂

mkdir /etc/kolla/config/ironic/ -p

wget http://mirror.beyondhosting.net/centos/7.5.1804/os/x86_64/isolinux/initrd.img -O /etc/kolla/config/ironic/ironic-agent.initramfs

wget http://mirror.beyondhosting.net/centos/7.5.1804/os/x86_64/isolinux/vmlinuz -O /etc/kolla/config/ironic/ironic-agent.kernel

Openstack Client Configuration:

Grab your keystone admin password from /etc/kolla/passwords.yml

kolla-ansible -i vbstack post-deploy

cat /etc/kolla/passwords.yml | grep keystone_admin_password

export OS_USERNAME=admin

export OS_PASSWORD=ttSbL92SubKgOao4Yp39ExERlSrJxhY1jUz3WaCy

export OS_TENANT_NAME=admin

export OS_AUTH_URL=http://192.168.5.201:35357/v3

export OS_PROJECT_DOMAIN_ID=default

export OS_USER_DOMAIN_ID=default

The following lines can be omitted

export OS_TENANT_ID=eddff72576f44d9e9638a50eb95957e0

export OS_REGION_NAME=RegionOne

export OS_CACERT=/path/to/cacertFile
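Instead of copying the password by hand, the lookup can be scripted. The sketch below assumes passwords.yml keeps the flat `key: value` layout. `kolla_password` is a hypothetical helper name.

```shell
# kolla_password KEY [FILE]: print the value stored under KEY.
# Assumes one "key: value" pair per line, with no nesting or quoting.
kolla_password() {
    awk -v key="$1" '$1 == (key ":") { print $2 }' "${2:-/etc/kolla/passwords.yml}"
}

# Possible use (not executed here):
#   export OS_PASSWORD=$(kolla_password keystone_admin_password)
```

This also keeps the password out of your shell history and out of any notes you paste the exports into.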

Run ansible to configure your servers. This assumes you already created your ansible host env layout.

Running your deployment

The next steps are to run an actual deployment and create all the containers etc.

Some critical pieces in /etc/kolla/globals.yml:

openstack_release: "rocky"

Reconfigure

Redeploy changes for specific services. When you need to make one change to a service that will not require a restart of other services, you can specify it directly to reduce the Ansible runtime.

kolla-ansible -i inventory-config -t nova reconfigure

Tips and Tricks

Kolla ships with several utilities intended to facilitate ease of operation.

/usr/share/kolla-ansible/tools/cleanup-containers is used to remove deployed containers from the system. This can be useful when you want to do a new clean deployment. It will preserve the registry and the locally built images in the registry, but will remove all running Kolla containers from the local Docker daemon. It also removes the named volumes.

/usr/share/kolla-ansible/tools/cleanup-host is used to remove remnants of network changes triggered on the Docker host when the neutron-agents containers are launched. This can be useful when you want to do a new clean deployment, particularly one changing the network topology.

/usr/share/kolla-ansible/tools/cleanup-images --all is used to remove all Docker images built by Kolla from the local Docker cache.

kolla-ansible -i INVENTORY deploy is used to deploy and start all Kolla containers.

kolla-ansible -i INVENTORY destroy is used to clean up containers and volumes in the cluster.

kolla-ansible -i INVENTORY mariadb_recovery is used to recover a completely stopped mariadb cluster.

kolla-ansible -i INVENTORY prechecks is used to check if all requirements are met before deploy for each of the OpenStack services.

kolla-ansible -i INVENTORY post-deploy is used to do post deploy on deploy node to get the admin openrc file.

kolla-ansible -i INVENTORY pull is used to pull all images for containers.

kolla-ansible -i INVENTORY reconfigure is used to reconfigure OpenStack service.

kolla-ansible -i INVENTORY upgrade is used to upgrade an existing OpenStack environment.

kolla-ansible -i INVENTORY check is used to do post-deployment smoke tests.

Docker Management:

- List all containers (only IDs): docker ps -aq

- Stop all running containers: docker stop $(docker ps -aq)

- Remove all containers: docker rm $(docker ps -aq)

- Remove all images: docker rmi $(docker images -q)
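One gotcha with the `$(docker ps -aq)` form: when there are no containers, the substitution is empty and docker complains about missing arguments. A sketch that handles the empty case is below. `stop_and_remove_all` and the `DOCKER_BIN` override are hypothetical names of mine. It only uses `docker ps -aq`, `docker stop` and `docker rm` from the list above.

```shell
# stop_and_remove_all: stop and remove every container, doing nothing when
# none exist. DOCKER_BIN is a hypothetical override; it defaults to "docker".
stop_and_remove_all() {
    docker_bin="${DOCKER_BIN:-docker}"
    ids=$("$docker_bin" ps -aq)
    if [ -z "$ids" ]; then
        echo "no containers to remove"
        return 0
    fi
    # $ids is left unquoted on purpose so each ID becomes its own argument.
    "$docker_bin" stop $ids
    "$docker_bin" rm $ids
}
```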

SSL

ca_01.pem – this refers to your CA certificate pem file. AKA intermediate certificate?

Request Cert

openssl req -newkey rsa:2048 -nodes -keyout client.key -out client.csr

Sign Cert

openssl ca -in client.csr -days 1000 -out client-.pem -batch

CentOS 7 – SSHD Weak Diffie-Hellman / Logjam

Add this line to sshd_config (or replace the existing one), and remove moduli of less than 2000 bits from the moduli file.

echo 'KexAlgorithms ecdh-sha2-nistp256,ecdh-sha2-nistp384,ecdh-sha2-nistp521,diffie-hellman-group14-sha1,diffie-hellman-group-exchange-sha1,diffie-hellman-group-exchange-sha256' >> /etc/ssh/sshd_config

awk '$5 > 2000' /etc/ssh/moduli > /tmp/moduli; cp /tmp/moduli /etc/ssh/moduli
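The awk filter works because the fifth column of each moduli entry is the size in bits. Here is a sketch of the same filter as a reusable function that writes to a separate file, so the result can be inspected before it replaces the live one. `filter_moduli` is a hypothetical name, and keeping comment lines is my own addition.

```shell
# filter_moduli MIN_BITS IN_FILE OUT_FILE: keep comment lines and entries
# whose size field (column 5) is greater than MIN_BITS.
filter_moduli() {
    awk -v min="$1" '/^#/ || ($5 + 0) > (min + 0)' "$2" > "$3"
}

# Possible use (not executed here):
#   filter_moduli 2000 /etc/ssh/moduli /tmp/moduli && cp /tmp/moduli /etc/ssh/moduli
```

If the filtered file comes out with no entries at all, don't copy it over; check it first.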

Doing things yourself, you learn that way.

I am by no means a professional at automotive paint, body work, paint correction, polishing or window tint. But I don’t think anyone should be scared to learn these skills for fear of damaging something they own. Anything can be fixed; it may take some time and a little bit of money, but if you’re not constantly learning, what fun is life?

I bought my 1995 Toyota Supra over the winter of 2016/2017. My Supra was in fairly “good” shape considering it’s a 25-year-old car, however that wasn’t good enough for me!

Thanks to the help of a few close friends and YouTube, I was able to learn the essential skills of doing automotive body work. This included hammering metal to fit properly, welding in patch metal for rusted panels, adding filler in low spots or rough areas, and then how to “block” sand a panel to achieve a very flat surface that gives you that beautiful glassy reflection.

Moving on from sanding you get into primer and surfacer. Ever heard of this? Never in my life would I have believed someone if they told me that spraying such a thin layer of material and then sanding it could change the appearance of the final product so much. After countless coats and blocking I ended up with what experts would call a PERFECT surface to apply paint.

Feeling confident in my prep work, I hauled my car off to be painted by a professional. I chose to use a professional painter on this project due to the value of my car: Supras in perfect condition are worth between $40,000 and $100,000… not a risk I was willing to take.

AND a few LONG weeks later! WE HAVE A PAINTED CAR!

Now the long and tedious process of reassembly begins. Fresh paint is extremely soft. In the first 30 days it goes through a process called “outgassing”, during which the chemical reaction that causes the paint to harden finishes. Any sort of wax or sealer on the paint during this time would cause the paint to remain soft and easy to damage.

After carefully reassembling the car and paying a lot of attention to delicate pieces and edges of panels, you end up with a final product something like this.

But I just couldn’t stop there!

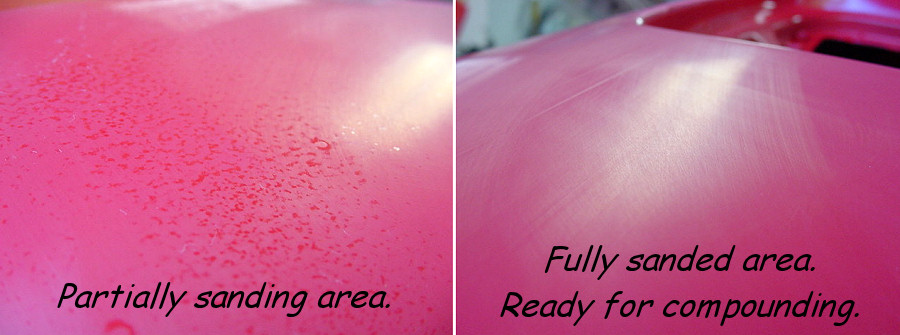

PAINT CORRECTION!

The idea behind paint correction is to “sand” the painted surface until it is entirely flat. Ever been to a car show or seen a car that looks like a piece of glass, with a reflection so clear you can see yourself? That’s paint correction. All paint naturally has what’s called “orange peel”, which is that reflective texture in the clear that looks like the skin of an orange. While paint is drying, there’s a differential in the dry time which creates micro dimples on the surface because of tension.

I have more pictures, need to find them and put them here.

Engine / Electrical:

Suspension:

My car has been in Ohio its whole 128,000 mile life… needless to say it saw some winter road salt here… and well… it fared okay but that’s simply not good enough! See the theme yet?

Sand blasted and powder coated!

Back in the car fresh as hell!

Network Switch PC

Several years ago I was given a small network switch from my high school. The switch was defective and dropped packets constantly so I wanted to give it new life.

Render

Removed the audio port riser from the motherboard so it would fit in the unit all the way.

Got a 1U server heatsink from Dynatron. It clears the lid just perfectly with the back plate!

Fired up the system to do a load test to see whether the blower provided adequate cooling.

Got our Intel 1G NIC mounted with a flexible PCIe x4 adapter cable.

Soldered the internal terminals of the uplink port to a Cat5 cable and plugged it into our NIC.

Made some lexan disk mounts and airflow containment.

Stacked SATA cables are a challenge: it takes some figuring out to get 2 compatible cables.

Soldered USB headers onto 4 of the ethernet ports and made ethernet to usb adapter cables!

Installing Linux with software RAID 1.

All done, you would never know!

Build the NAS from hell from an old nimble CS460

About 5 years ago we bought some Nimble storage arrays for customer services… well, those things are out of production, and since they have the street value of 3 pennies I figured it was time to reverse engineer them and use them for other purposes.

The enclosure is made by Supermicro; it’s a bridge bay server which has 2 E5600 based systems attached to one side of the SAS backplane and 2 internal 10G interfaces. It appears they have a USB drive to boot an image of the OS, and then they store configuration on a shared LVM or some sort of cluster filesystem on the drives themselves. Each controller has what looks like a 1GB NVRAM-to-flash PCIe card that is used to ack writes as they come in, which then get mirrored internally over the 10G interfaces.

I plan to use one controller (server) as my Plex Media box and the other one for virtual machines. The plan right now is to use BTRFS for the drives and use BCache for SSD acceleration of the block devices. I can run iSCSI over the internal interface to provide storage to the 2nd controller as VM host.

To be continued.

— Update

Found out both of my controllers had bad motherboards: one was fine with a single CPU but would randomly restart, the other wouldn’t POST. I feel bad for anyone still running a Nimble; it’s a ticking time bomb. So I grabbed 2 controllers off eBay for $100 shipped; they got here today and both were good. I went ahead and flashed the firmware to the vanilla Supermicro one so I could get access to the BIOS. I had to use the internal USB port, as Nimble’s firmware disables the rest of the USB boot devices, and the BIOS password is set even with defaults so you can’t log in. I tried the passwords available on the ole interwebs but nothing seemed to work; it only accepts 6 chars but the online passwords are 8-12.

Looks like bcachefs is gonna be the next badass filesystem now that btrfs has been dropped by Red Hat. It will have full write offloading and cache support like ZFS, so we can use the NVRAM card. Speaking of write cache, I have an email in to Netlist to try and get the kernel module for their 1G NVRAM write cache card. Worst case scenario, I have to pull it out of the kernel Nimble was using…

As of writing this I have both controllers running CentOS7 installed to their own partitions on the first drive in the array, and I have /boot and the boot loader installed to the 4G USB drives that nimble had their bootloader installed to.

sda   8:0    0 558.9G  0 disk

sdb   8:16   0 558.9G  0 disk

sdc   8:32   0 558.9G  0 disk

sdd   8:48   0 558.9G  0 disk

sde   8:64   0   1.8T  0 disk

sdf   8:80   0   1.8T  0 disk

sdg   8:96   0   1.8T  0 disk

sdh   8:112  0   1.8T  0 disk

sdi   8:128  0   1.8T  0 disk

sdj   8:144  0   1.8T  0 disk

sdk   8:160  0   1.8T  0 disk

sdl   8:176  0   1.8T  0 disk

sdm   8:192  0   1.8T  0 disk

sdn   8:208  0   1.8T  0 disk

sdo   8:224  0   1.8T  0 disk

sdp   8:240  0   1.8T  0 disk

sdq   65:0   0   3.8G  0 disk

And I went ahead and created an MDRaid array on 6 of the spindle disks with LVM to get started messing with it. I need to get bcachefs compiled into the kernel and give that a go, will come with time!

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdj[6] sdi[4] sdh[3] sdg[2] sdf[1] sde[0]

9766912000 blocks super 1.2 level 5, 512k chunk, algorithm 2 [6/5] [UUUUU_]

[=>...................] recovery = 7.7% (151757824/1953382400) finish=596.9min speed=50304K/sec

bitmap: 5/15 pages [20KB], 65536KB chunk
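For keeping an eye on a rebuild like this without squinting at the progress bar, the percentage can be pulled out of /proc/mdstat with a little awk. The sketch below assumes the output format shown above and only looks at `recovery` lines (not `resync` or `reshape`). `md_recovery` is a hypothetical helper name.

```shell
# md_recovery [FILE]: print "<array> <percent>" for each array that is rebuilding.
# Reads /proc/mdstat unless another file is given.
md_recovery() {
    awk '
        /^md[0-9]+ :/ { array = $1 }          # remember the current array name
        /recovery =/ {
            # The percentage is the second field after the word "recovery".
            for (i = 1; i <= NF; i++)
                if ($i == "recovery") print array, $(i + 2)
        }
    ' "${1:-/proc/mdstat}"
}
```

The finish estimate in the output is just remaining blocks divided by speed: (1953382400 − 151757824) / 50304 ≈ 35815 seconds, which is the 596.9 minutes shown.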

Maybe I’ll dabble with iSCSI tomorrow.

— Update

Installed Plex tonight and spent some time getting Sonarr and other misc tools for acquiring metadata and video from the interballs. Also started investigating bcache and bcachefs deployment in CentOS. http://10sa.com/sql_stories/?p=1052

Also started investigating some water blocks to potentially use water cooling on my NAS… it’s too loud, and buying different heatsinks doesn’t seem very practical when a water block is $15 on eBay.

–Update

I am def going to use water cooling; the 40mm fans are really annoying and this system has rather powerful E5645 CPUs which have decent thermal output. I found some 120mm aluminum radiators on eBay for almost nothing, so 2 blocks + fittings + hose is going to be around $80 per system. I need to find a cheap pump option but I think I know what I’m doing there.

Here’s a picture of one of the controller modules with the fans and a CPU removed.

An 80mm fan fits perfectly and 2 of the 3 bolt holes even line up to mount it in the rear of the chassis. I will most likely order some better fans from Delta with PWM/speed capability so that the SM smart BIOS can properly speed them up and down. You can see that Supermicro/Nimble put 0 effort into airflow management in these systems. They are using 1U heatsinks with no ducting at all, so airflow is “best effort”. I would guess the front CPU probably runs 40-50C most of its life, simply because airflow is only created by a fixed 40mm fan in front of it.

–Update

Welp, I got the news I figured I would about the NV1 card from Netlist: it is EOL and they stopped driver development for it. They were nice enough to send me ALL of the documentation and the kernel module though. It supports up to kernel 2.6.38, so you could run the latest CentOS 6 and get it supported… maybe I’ll mess with that? I attached it here in case anyone wants the firmware or Linux kernel module driver for the Netlist NV1. Netlist-1.4-6 Release